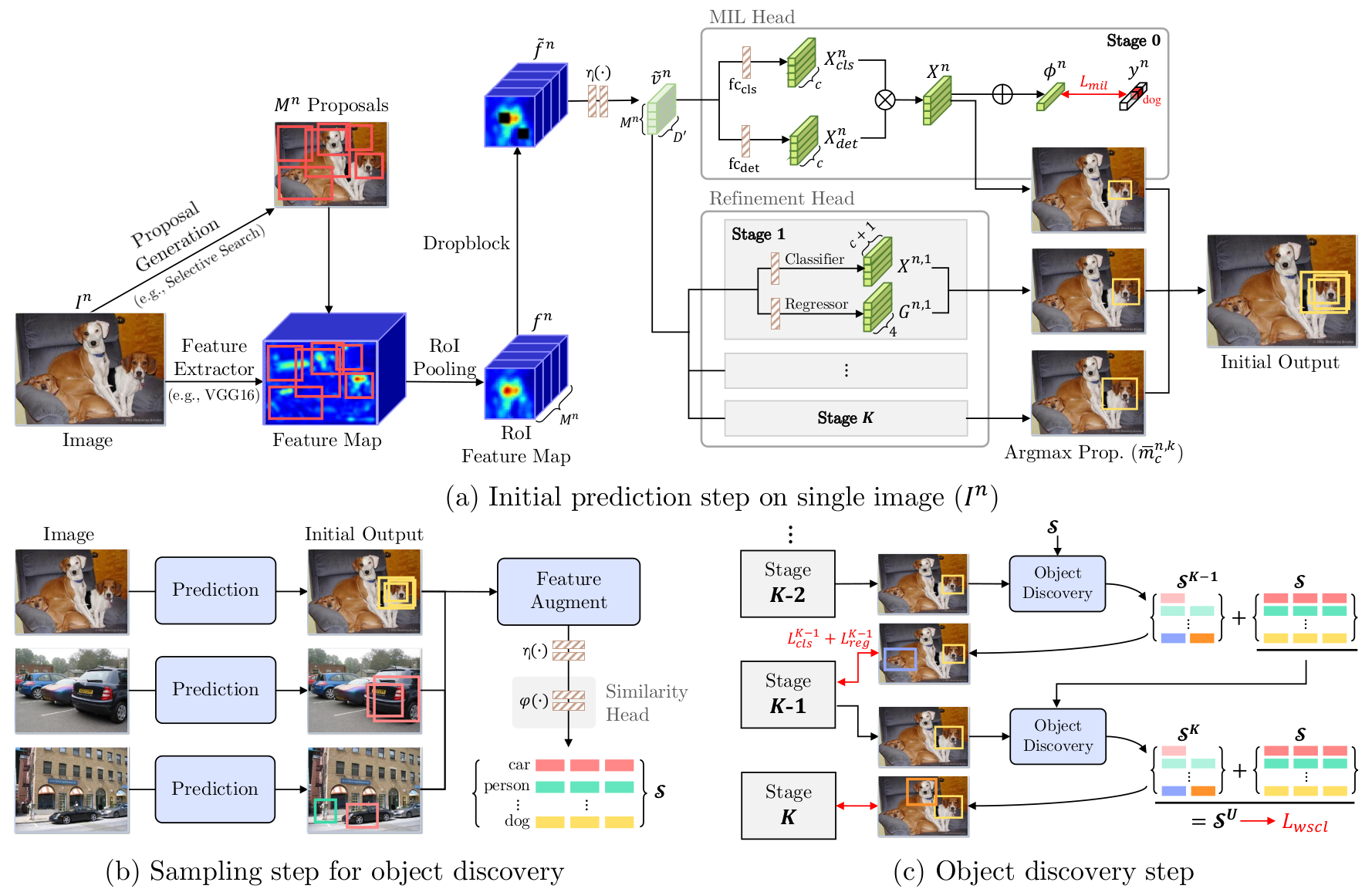

Method

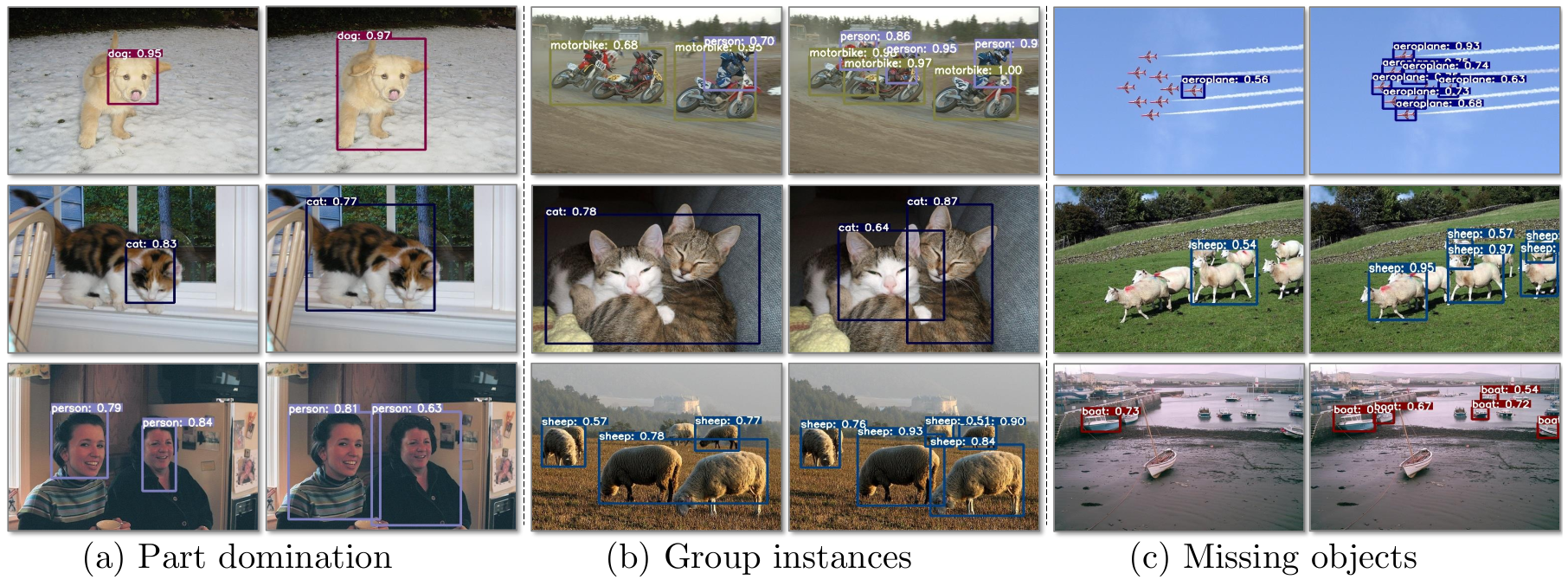

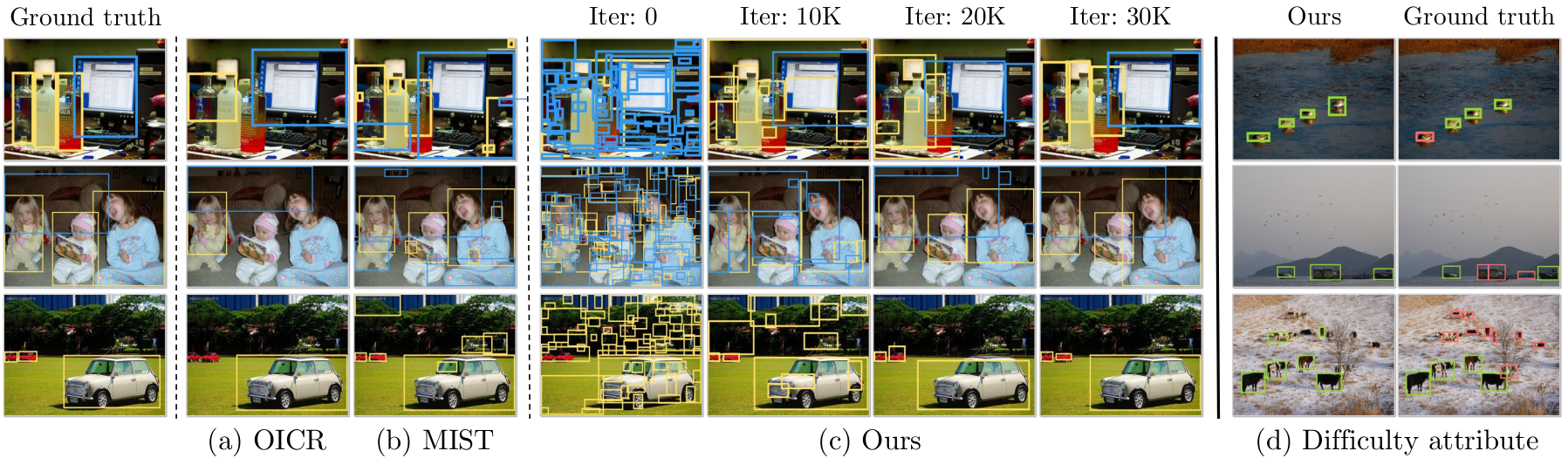

We introduce a novel multiple instance labeling method that addresses the limitations of current labeling methods in weakly supervised object detection (WSOD). Our proposed object discovery module explores all proposal candidates by measuring their similarity to the highest-scoring representation. We further propose a weakly supervised contrastive loss (WSCL) so that a reliable similarity threshold can be set. WSCL encourages the model to learn similar features for objects of the same class and discriminative features for objects of different classes. To ensure that the model learns appropriate features, we supply WSCL with a large number of positive and negative instances through three feature augmentation methods suited to WSOD.
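The two ideas in this paragraph can be illustrated with a minimal NumPy sketch. It rests on assumptions the text does not state. Proposals are compared by cosine similarity to the top-scoring proposal for one class. The similarity threshold is a fixed, hypothetical value and is not learned. The loss follows the standard supervised contrastive (SupCon) formulation rather than the exact WSCL objective. The three feature augmentation methods are not modeled. The names `discover_objects` and `supervised_contrastive_loss` are hypothetical.

```python
import numpy as np


def discover_objects(features, scores, threshold):
    """Hypothetical sketch of similarity-based object discovery.

    features: (N, D) proposal features; scores: (N,) scores for one class.
    Marks as positive every proposal whose cosine similarity to the
    highest-scoring proposal is at least `threshold` (assumed fixed here).
    """
    top = int(np.argmax(scores))
    normed = features / np.linalg.norm(features, axis=1, keepdims=True)
    sims = normed @ normed[top]  # cosine similarity to the top proposal
    return sims >= threshold


def supervised_contrastive_loss(embeddings, labels, temperature=0.1):
    """Standard supervised contrastive loss, used as a stand-in for WSCL.

    Pulls together embeddings that share a label and pushes apart
    embeddings with different labels. Requires at least two samples.
    """
    z = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    logits = z @ z.T / temperature
    n = len(labels)
    self_mask = np.eye(n, dtype=bool)
    # Exclude each anchor from its own softmax denominator.
    logits = np.where(self_mask, -np.inf, logits)
    # Numerically stable log-softmax over the remaining samples.
    row_max = np.max(logits, axis=1, keepdims=True)
    log_prob = logits - row_max - np.log(
        np.sum(np.exp(logits - row_max), axis=1, keepdims=True)
    )
    # Positives: same label, excluding the anchor itself.
    pos_mask = (labels[:, None] == labels[None, :]) & ~self_mask
    pos_counts = pos_mask.sum(axis=1)
    valid = pos_counts > 0  # skip anchors with no positive partner
    pos_log_prob = np.where(pos_mask, log_prob, 0.0).sum(axis=1)
    mean_log_prob_pos = pos_log_prob[valid] / pos_counts[valid]
    return float(-mean_log_prob_pos.mean())
```

In this sketch, a lower `temperature` sharpens the softmax, so negatives that lie close to an anchor are penalized more heavily. Feeding the loss more positive and negative instances, as the paragraph describes for WSCL, gives each anchor more pairs to contrast against.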